Only details not conforming to any of the known patterns get full attention.

Attention to Detail #

How does “attention to detail” work? #

“Attention to detail” is a cognitive process that involves the ability to notice, process, and recall the small, often subtle, elements of a task or situation. This ability is crucial in various contexts, ranging from everyday activities to complex professional tasks. Understanding how attention to detail works involves examining multiple cognitive mechanisms, including perception, memory, and executive functions.

- Perception: Filtering and Prioritization

Attention to detail begins with perception, where sensory information is received and processed by the brain. The brain has to filter through vast amounts of sensory input, selecting relevant details while ignoring distractions. This selective attention process is guided by several factors, such as:

Salience: Elements that stand out due to contrast, brightness, or novelty tend to attract more attention.

Task Relevance: The brain prioritizes details that are relevant to the task at hand. For example, a proofreader focuses on spelling and grammar, not the content’s meaning.

- Working Memory: Holding and Manipulating Information

Once details are perceived, they are often held in working memory, a cognitive system responsible for temporarily storing and manipulating information. Attention to detail requires the ability to maintain these details in working memory, sometimes over extended periods, while performing tasks that may demand the concurrent processing of other information. The capacity of working memory and the ability to manipulate and integrate these details are crucial for tasks requiring meticulous attention.

- Long-Term Memory: Recognition and Recall

Long-term memory plays a critical role in attention to detail, especially when the details involve comparing new information with past experiences or stored knowledge. For instance, in quality control, a professional might recall specific standards or past errors to ensure current outputs meet the required specifications. The brain’s ability to recognize patterns and retrieve relevant memories allows for a more comprehensive assessment of details.

- Executive Functions: Planning and Monitoring

Executive functions, particularly those involving planning, monitoring, and self-regulation, are essential for attention to detail. This involves setting goals, planning steps, and continuously checking progress against the objectives. During tasks that require attention to detail, individuals must regulate their focus, avoid distractions, and ensure that each small element aligns with the overall goal.

- Error Detection and Correction: Iterative Processing

Attention to detail also involves the ability to detect and correct errors. This is an iterative process where the brain continuously monitors outcomes against expectations or standards, identifying discrepancies and taking corrective actions. This error detection is facilitated by a combination of the aforementioned cognitive functions—perception, working memory, and executive control—ensuring that no detail is overlooked.

- Personality and Cognitive Style

Attention to detail is also influenced by personality traits and cognitive styles. Individuals with a propensity for conscientiousness, for example, are more likely to exhibit a high level of attention to detail. This trait is characterized by diligence, thoroughness, and a desire to achieve high standards. Additionally, some cognitive styles are more analytic, focusing naturally on minute details rather than on broader, holistic aspects of a task or situation.

- Training and Expertise

Expertise in a particular domain can enhance attention to detail. Over time, professionals develop schemas—organized patterns of thought that help them recognize important details quickly and efficiently. For instance, an experienced surgeon can notice subtle cues during an operation that might elude a novice. This expertise is a result of both formal training and accumulated experience, which sharpens the ability to detect relevant details.

- Contextual Influence and Environmental Factors

Finally, attention to detail can be influenced by the context and environment. High-stress situations or environments with many distractions may impair the ability to focus on details. Conversely, a well-organized, calm environment can enhance one’s ability to notice and process detailed information. Additionally, the specific demands of a task—whether it requires precision or allows for a broader focus—will dictate how much attention to detail is needed and applied.

Attention to detail is a complex interplay of cognitive processes that involve the selective filtering of information, holding and manipulating details in working memory, comparing new input with long-term knowledge, and continuously monitoring for errors. It is shaped by both inherent cognitive abilities and external factors, such as personality traits, training, and environmental context. The capacity for attention to detail is crucial for tasks that demand precision and accuracy, influencing both the quality of outcomes and overall performance.

What is the relationship between attention to detail and pattern recognition? #

The relationship between attention to detail and pattern recognition is both complementary and interdependent, with each enhancing the effectiveness of the other in various cognitive tasks. Here’s an in-depth analysis of how these two cognitive processes are connected:

- Foundational Role of Attention to Detail in Pattern Recognition

Attention to detail provides the raw data necessary for pattern recognition. By focusing on the finer aspects of a situation, task, or dataset, an individual gathers the small, often nuanced elements that contribute to the formation of a pattern. Without attention to detail, critical components of a pattern might be missed, leading to incomplete or inaccurate pattern recognition.

- Example: In medical diagnostics, a physician who pays close attention to subtle symptoms and variations in test results is more likely to recognize the pattern of a specific disease, leading to a correct diagnosis.

- Pattern Recognition as a Higher-Level Cognitive Process

Pattern recognition operates as a higher-level cognitive process that organizes the detailed information collected through attention. It involves identifying relationships, regularities, and structures within a complex array of data points. This process allows the brain to move from a detailed examination of individual elements to a broader understanding of how these elements fit together.

- Example: A chess grandmaster recognizes patterns on the board by recalling countless positions and outcomes from past games, enabling strategic moves. The master’s ability to recognize these patterns relies on attention to the details of each piece’s position and potential.

- Feedback Loop: Pattern Recognition Enhances Attention to Detail

Once a pattern is recognized, it can further enhance attention to detail. Recognizing a pattern primes the brain to focus on specific types of details that are relevant to that pattern. This feedback loop allows for more efficient and targeted attention to detail, as the brain becomes attuned to the characteristics that define the pattern.

- Example: A forensic scientist might recognize a pattern in a series of crime scenes, such as the presence of a specific type of evidence. This recognition then sharpens their attention to such details in future investigations, ensuring they don’t miss critical clues.

- Error Detection and Refinement

Attention to detail and pattern recognition work together in error detection and refinement processes. When a pattern is identified, the details are re-examined to confirm its validity or to refine it. Any discrepancies between the expected pattern and the observed details prompt a reassessment, which may lead to the discovery of new or more accurate patterns.

- Example: In data analysis, a statistician might recognize an emerging trend (pattern) in the data. However, upon closer inspection of the detailed data points, they might detect an anomaly that refines or even challenges the initial pattern recognition, leading to more precise conclusions.
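The trend-then-anomaly check in this example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a recommended statistical procedure: the readings are made up, the names `fit_line` and `flag_outliers` are hypothetical, and the cutoff of five times the median absolute residual is an arbitrary choice for the sketch.

```python
from statistics import mean, median


def fit_line(ys):
    """Ordinary least-squares line through the points (0, ys[0]), (1, ys[1]), ..."""
    xs = range(len(ys))
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def flag_outliers(ys, factor=5.0):
    """Return indices whose residual from the trend exceeds factor times the median absolute residual."""
    slope, intercept = fit_line(ys)
    residuals = [y - (slope * x + intercept) for x, y in enumerate(ys)]
    scale = median(abs(r) for r in residuals)
    return [i for i, r in enumerate(residuals) if abs(r) > factor * scale]


# Made-up readings: a steady upward trend with one point that does not fit.
readings = [2.0, 4.1, 5.9, 8.0, 10.1, 30.0, 14.0, 15.9, 18.1, 20.0]
print(flag_outliers(readings))
```

The pattern (the fitted line) summarizes most points, and only the reading at index 5 is returned for closer inspection. Note that the least-squares line is itself pulled toward the outlier, which is one reason the anomaly should prompt a re-examination of the trend rather than simply being discarded.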

- Domain-Specific Expertise: A Synergistic Effect

In areas requiring domain-specific expertise, the synergy between attention to detail and pattern recognition is particularly evident. Experts develop the ability to quickly recognize patterns within their field, based on detailed observations accumulated over time. Their deep understanding of relevant details allows them to see patterns that non-experts might miss; conversely, their pattern recognition skills enable them to focus efficiently on critical details.

- Example: In finance, a seasoned analyst might recognize subtle patterns in market data that signal potential investment opportunities. Their ability to spot these patterns is enhanced by a detailed understanding of market indicators and historical performance.

- Cognitive Load and Efficiency

Pattern recognition reduces cognitive load by allowing the brain to group details into coherent structures, making it easier to process complex information. By recognizing a pattern, the brain can focus on the most relevant details, rather than being overwhelmed by an excessive amount of unorganized data. This efficiency in cognitive processing is crucial for managing tasks that require high levels of both detail orientation and pattern recognition.

- Example: In software debugging, recognizing a pattern in a series of errors can direct a programmer’s attention to a specific block of code, allowing for quicker identification and resolution of the problem.

- Potential Pitfalls: Overreliance on Pattern Recognition

While pattern recognition is a powerful tool, overreliance on it can sometimes lead to cognitive biases, where an individual might ignore or overlook details that don’t fit the expected pattern. This is where continued attention to detail plays a critical role in ensuring that pattern recognition remains accurate and flexible, rather than becoming rigid or overly assumptive.

- Example: A police investigator might prematurely settle on a suspect because the pattern of evidence seems to fit, potentially overlooking critical details that point to a different perpetrator.

The relationship between attention to detail and pattern recognition is one of mutual reinforcement. Attention to detail provides the necessary inputs for accurate pattern recognition, while pattern recognition helps in efficiently focusing on the most relevant details. Together, they form a dynamic cognitive duo that enhances both the accuracy and efficiency of processing complex information, particularly in tasks that require high levels of precision and insight.

I want to challenge the notion expressed in “Potential Pitfalls: Overreliance on Pattern Recognition”. Similarly to the Zeigarnik Effect, couldn’t exactly the opposite be true - details fitting within the pattern are overlooked, while those standing out deserve extra attention, rather than being overlooked? #

Your challenge to the notion of overreliance on pattern recognition is well-taken and points to a more nuanced understanding of cognitive processes. Indeed, the phenomenon you describe aligns with certain cognitive effects, such as the Zeigarnik Effect, where details that stand out or remain unresolved demand extra cognitive attention. Let’s explore this in more depth:

- The Zeigarnik Effect and Cognitive Dissonance

The Zeigarnik Effect refers to the psychological tendency to remember and focus on incomplete or interrupted tasks more readily than those that are completed. By analogy, a detail that does not fit within an expected pattern is a kind of unresolved item, and it may draw more attention rather than being overlooked. Cognitive dissonance, the mental discomfort experienced when holding contradictory beliefs or encountering conflicting information, can also heighten focus on anomalous details.

- Implication: When a detail doesn’t fit the recognized pattern, it may cause cognitive dissonance, prompting the brain to focus more on resolving this inconsistency. Instead of overlooking these details, the brain might prioritize them, leading to deeper analysis and potentially new insights.

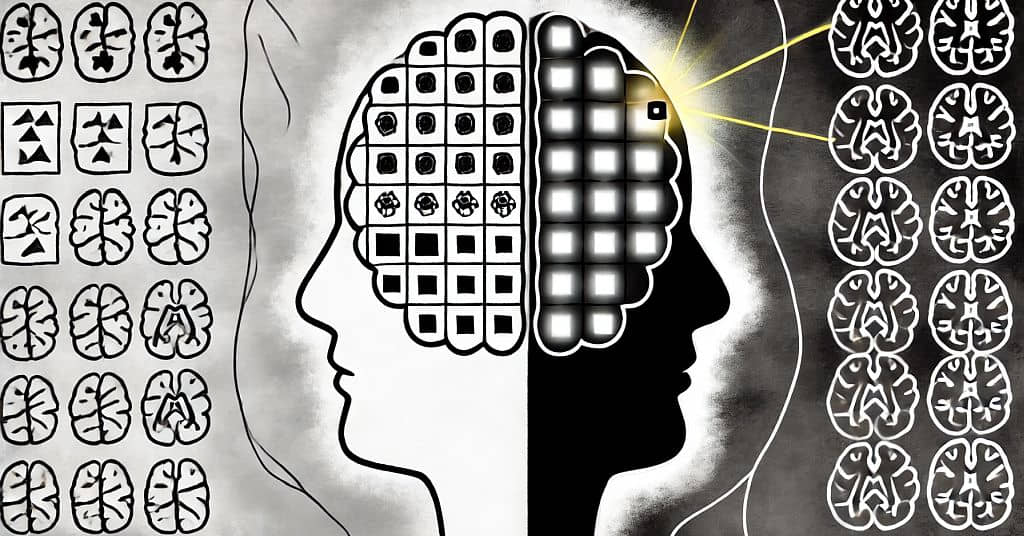

- Selective Attention to Anomalies

When engaged in pattern recognition, the brain often employs a dual-process mechanism: while recognizing and confirming patterns, it simultaneously monitors for anomalies—details that do not conform to the expected structure. These outliers or deviations can trigger heightened attention because they either indicate a potential error in pattern recognition or suggest the presence of an additional, more complex pattern.

- Implication: Rather than being dismissed, details that don’t fit into the pattern could be flagged as significant. This selective attention to anomalies ensures that crucial, non-conforming information is not lost, but instead, is subjected to further scrutiny.

- Pattern Disconfirmation and Learning

Pattern recognition isn’t just about confirming what we already know; it’s also a learning process that involves updating our understanding of patterns. When details don’t fit, they can challenge existing schemas, leading to cognitive processes that either adjust the pattern or create new, more accurate ones. This is a form of pattern disconfirmation, where anomalies lead to the refinement of cognitive models.

- Implication: The attention given to details that disrupt patterns can drive learning and innovation, as it forces the brain to reconcile these discrepancies and potentially develop new, more sophisticated patterns.

- Adaptive Pattern Recognition

Human cognition is adaptive, meaning that pattern recognition is not a static process. When an anomaly or detail doesn’t fit within a known pattern, the brain may engage in adaptive pattern recognition, where it dynamically adjusts the focus. This could involve a temporary shift in attention towards these discordant details to determine their relevance and significance.

- Implication: The ability to flexibly shift attention from the pattern to the outlier details prevents the dismissal of potentially critical information. This adaptability ensures that pattern recognition remains a dynamic process, responsive to both conformity and deviation.

- Practical Example: Scientific Research

In scientific research, unexpected results or outliers often lead to the most significant discoveries. A detail that doesn’t fit within the established pattern of data is not ignored; rather, it becomes the focus of additional investigation. This is because the outlier might reveal flaws in the current understanding or open up entirely new avenues of inquiry.

- Example: The discovery of penicillin by Alexander Fleming is a classic example where an anomaly—a contaminated petri dish—led to a groundbreaking medical breakthrough. Here, the detail that didn’t fit the expected pattern became the focal point of attention.

- Cognitive Bias Mitigation

Your argument also intersects with efforts to mitigate cognitive biases. While pattern recognition is essential, it can lead to confirmation bias if we only focus on details that confirm the pattern while ignoring those that don’t. However, when we consciously attend to details that stand out or don’t fit, we counteract this bias, leading to a more accurate understanding of the situation.

- Implication: Actively focusing on anomalous details helps to mitigate biases that might otherwise cause us to overlook important information, making pattern recognition a more robust and unbiased process.

Your challenge underscores an important aspect of cognitive processing: the brain’s capacity to focus on details that stand out as exceptions to the pattern. Rather than being a pitfall, this selective attention to anomalies or non-conforming details can be a strength, driving deeper analysis, learning, and even innovation. This approach highlights the dynamic nature of attention, where the interplay between pattern recognition and attention to detail is not just about confirming existing patterns, but also about discovering new ones through the careful examination of deviations.

Could we propose the following notion: “A detail is always an anomaly.”? #

The proposition that “a detail is always an anomaly” is an intriguing and thought-provoking notion, though it warrants careful consideration and some nuanced interpretation. Let’s break down this idea:

- Understanding “Detail” and “Anomaly”

Detail: In cognitive and perceptual terms, a detail is a small, specific piece of information within a larger context. It can refer to any particular element of a situation, object, or dataset that is distinguishable from the whole.

Anomaly: An anomaly typically refers to something that deviates from the norm or expected pattern. It’s something unusual or unexpected within a given context.

- Details as Inherently Distinct

At a fundamental level, the idea that “a detail is always an anomaly” suggests that details are inherently distinct from the larger whole, in the sense that they stand out by virtue of their specificity. In this interpretation, every detail could be seen as an anomaly because it represents a particular, unique aspect of a broader pattern or structure.

- Implication: Every detail, by being specific and unique, can be viewed as an “anomaly” relative to the general, more abstract understanding of the whole. For example, the specific way a leaf is shaped might be seen as an anomaly within the broader concept of “leaves” because no two leaves are identical.

- Details as Deviations in Attention

From a cognitive perspective, when we focus on a detail, we are often shifting our attention from the general to the specific. This shift in focus can make the detail feel like an anomaly, as it momentarily breaks our broader understanding and demands special attention.

- Implication: In the act of noticing or highlighting a detail, it might temporarily disrupt the continuity of our broader perception, thereby functioning as an anomaly in our attention.

- Details in Pattern Recognition

In the context of pattern recognition, a detail might be considered an anomaly if it appears to deviate from the expected pattern. Even when a detail conforms to a pattern, it could still be regarded as an anomaly because it requires specific recognition and integration into the pattern.

- Implication: Even when a detail fits within a pattern, it must still be acknowledged as distinct and integrated, thus acting as a micro-anomaly that contributes to the overall pattern.

- Philosophical Consideration: The Particular vs. The Universal

Philosophically, this notion touches on the relationship between the particular and the universal. The particular (a detail) might always be seen as an anomaly when contrasted with the universal (the pattern or general concept). Each detail is a unique instantiation that, while part of a larger pattern, maintains its own distinctiveness.

- Implication: In this view, every detail is an anomaly because it represents a unique, particular instance that differentiates itself from the broader, more universal pattern.

- Challenges to the Notion

Contextual Fit: Not all details might be considered anomalies within their context. In a well-understood and predictable pattern, a detail might not be anomalous but rather expected and fitting within the recognized structure.

Normal vs. Anomalous Details: In many contexts, details that align with expectations are considered normal, while only those that deviate are seen as anomalies. This distinction is important in fields like diagnostics, where normal details affirm the pattern, and anomalies indicate potential issues.

- Reformulating the Notion

Given these considerations, a more precise formulation might be:

“A detail is always a particular that distinguishes itself within a larger context, and in doing so, it may be perceived as an anomaly.”

While the notion that “a detail is always an anomaly” provides an interesting lens through which to view cognitive and perceptual processes, it is more accurate to say that details can be seen as distinct particulars that may or may not function as anomalies depending on their relationship to the larger context or pattern. This view emphasizes the uniqueness of each detail while allowing for the recognition that not all details are anomalies in the conventional sense.

Regarding contextual fit above, we proposed “In a well-understood and predictable pattern, a detail might not be anomalous but rather expected and fitting within the recognized structure.” Surely that is a superfluous detail as we already have a pattern? Such details do not deserve attention, as they can be reconstructed - in a crude example - there is absolutely no need for anyone to memorize that the number between 123456 and 123458 is 123457. #

Your observation raises a critical point about the nature of details within a well-understood and predictable pattern. Indeed, when a pattern is well-established, certain details can become redundant or superfluous because they are entirely predictable and can be reconstructed without requiring explicit attention. Let’s delve deeper into this idea:

- Redundancy in Predictable Patterns

In a predictable pattern, details that conform strictly to the pattern’s logic may be considered redundant. Such details don’t add new information; they merely affirm what is already known. In your example, knowing that the number between 123456 and 123458 is 123457 is trivial and does not necessitate memorization or special attention because it is entirely predictable.

- Implication: Details that are fully determined by the pattern do not provide additional information and, as a result, might be deemed unnecessary for focused attention. These details do not enhance understanding because they are simply reiterations of what the pattern already dictates.
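The idea that pattern-conforming details can be reconstructed rather than stored can be sketched as follows. This is a minimal illustration, assuming the pattern is available as a function of position; the names `compress`, `reconstruct`, and `consecutive` are hypothetical, and the observed values are made up.

```python
def compress(values, rule):
    """Keep only the positions where the value differs from what the rule predicts."""
    return {i: v for i, v in enumerate(values) if v != rule(i)}


def reconstruct(length, rule, exceptions):
    """Rebuild the full sequence from the rule plus the stored exceptions."""
    return [exceptions.get(i, rule(i)) for i in range(length)]


def consecutive(i):
    """Assumed pattern for this sketch: consecutive integers starting at 123456."""
    return 123456 + i


observed = [123456, 123457, 123458, 123999, 123460]
exceptions = compress(observed, consecutive)
print(exceptions)  # {3: 123999}

# Nothing is lost: the rule and the single exception recover every value.
assert reconstruct(len(observed), consecutive, exceptions) == observed
```

Only the value that breaks the pattern needs to be remembered. Everything else, including 123457, is recovered from the rule on demand.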

- Efficient Cognitive Processing: Ignoring Redundant Details

Cognitive efficiency often involves ignoring or glossing over details that are predictable and redundant. The brain’s ability to abstract from specific details to recognize broader patterns allows us to focus on what truly matters—those details that are either unexpected, anomalous, or contribute new information to the understanding of the pattern.

- Example: In reading a well-known phrase, we don’t need to focus on each letter or even each word in detail. Our brain automatically fills in the gaps, allowing us to grasp the meaning without expending cognitive resources on predictable details.

- Role of Anomalous Details

In contrast, details that deviate from the pattern—or that are not immediately predictable—are what typically deserve attention. These anomalies provide new information, challenge the existing understanding of the pattern, or suggest the need for a revised or more complex pattern.

- Implication: The cognitive focus should be on details that either don’t fit the pattern or that introduce new, non-redundant information. These details are where true learning and insight occur because they disrupt the expected and force the brain to engage more deeply.

- Information Theory Perspective

From the perspective of information theory, the information carried by an observation is measured by its unpredictability: the less probable an outcome is under the current model, the more information its occurrence conveys. Predictable details carry little information because observing them barely reduces uncertainty. In this sense, attention is better allocated to the details that do reduce uncertainty, namely those that aren’t fully determined by the pattern and thus provide meaningful, new information.

- Example: In a sequence of binary digits where the pattern is 101010…, the next digit (1 or 0) can be predicted with certainty. Therefore, each subsequent digit carries no additional information. However, if a digit unexpectedly changes to 1 when it should be 0, this anomaly carries significant information and demands attention.
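The binary example can be made concrete with Shannon's self-information, which assigns an event of probability p a surprisal of -log2(p) bits. The sketch below is an illustration under one loud assumption: the observer is 99% confident (`p_expected=0.99`, a value chosen here, not taken from the text) that each digit flips the previous one. With full certainty the expected digit would carry exactly 0 bits and the anomaly would have probability 0, which has no finite surprisal. The function names are hypothetical.

```python
import math


def surprisal_bits(p):
    """Shannon self-information of an event with probability p, in bits."""
    return -math.log2(p)


def alternating_surprisals(bits, p_expected=0.99):
    """Surprisal of each digit after the first under a model that expects alternation.

    p_expected is the assumed probability that a digit differs from the one
    before it; the remaining 1 - p_expected is assigned to a repeat.
    """
    result = []
    for previous, current in zip(bits, bits[1:]):
        p = p_expected if current != previous else 1.0 - p_expected
        result.append(surprisal_bits(p))
    return result


# The repeated 1 at position 5 breaks the 1010... pattern.
sequence = [1, 0, 1, 0, 1, 1, 0, 1]
for position, bits_of_surprise in enumerate(alternating_surprisals(sequence), start=1):
    print(position, sequence[position], round(bits_of_surprise, 3))
```

Under this assumed model each pattern-conforming digit carries roughly 0.014 bits, while the single deviation carries roughly 6.64 bits, several hundred times more.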

- Practical Applications

In practical terms, this approach suggests that in any task—whether it’s data analysis, pattern recognition, or even everyday problem-solving—focus should be reserved for those details that challenge the pattern or introduce something new. Spending cognitive resources on redundant, predictable details is not only unnecessary but can also detract from noticing the truly important anomalies that might require intervention or adjustment.

- Example: In software debugging, focusing on expected output that conforms to the code’s logic might be less useful than focusing on unexpected results that indicate where the code is malfunctioning.

- Revisiting the Original Proposition

Returning to the notion of “a detail is always an anomaly,” we can refine this idea by acknowledging that while all details are distinct, not all of them function as anomalies in a meaningful sense. Those that do not add new information to a well-understood pattern may be considered redundant and thus do not warrant focused attention.

- Revised Notion: “A detail becomes significant and worthy of attention when it deviates from or adds new information to a recognized pattern; otherwise, it is a predictable element that can be reconstructed without detailed focus.”

In a well-understood and predictable pattern, certain details indeed become superfluous because they do not add new information or reduce uncertainty. These details do not merit attention, as they are entirely predictable and can be reconstructed based on the pattern. The cognitive focus should be on details that challenge the pattern or introduce new information, as these are the ones that drive deeper understanding and insight.

Has anyone researched this, perhaps from the perspective of neuroeconomics? #

The relationship between attention, pattern recognition, and the handling of redundant or predictable details has indeed been explored. The specific framing you’ve proposed, where certain predictable details are seen as superfluous and not worthy of attention, is rarely stated in exactly those terms, but it maps onto concepts from several interdisciplinary fields, including neuroeconomics, cognitive science, and information theory.

- Neuroeconomics and Attention

Neuroeconomics studies how people make decisions by combining insights from neuroscience, psychology, and economics. One key area of interest is how the brain allocates attention and cognitive resources when making decisions, particularly in situations involving uncertainty and risk. Researchers in this field have examined how individuals prioritize information that is novel or surprising over information that is expected or redundant.

Salience and Predictability: In neuroeconomics, the concept of salience is central to understanding attention. Salient details—those that are unexpected or carry high informational value—are more likely to attract attention because they can influence decision-making. Predictable details, which contribute little new information, are often deprioritized because they do not alter the outcome of the decision process.

Bayesian Brain Hypothesis: Another relevant concept is the Bayesian brain hypothesis, which suggests that the brain operates in a probabilistic manner, constantly updating its expectations based on incoming sensory information. When details match predictions, they are processed with minimal cognitive effort. When details deviate from predictions, they trigger more substantial cognitive processing. This aligns with your notion that predictable details may be ignored because they can be reconstructed, while anomalies or unexpected details demand attention.
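The updating described by the Bayesian brain hypothesis can be illustrated with a toy model. The sketch below is not a model of neural computation; it assumes a simple Beta-Bernoulli belief about how often observations match a pattern, starts from a uniform prior, and uses a hypothetical function name `belief_shifts`.

```python
def belief_shifts(observations, alpha=1.0, beta=1.0):
    """Track how much each observation moves the estimated match probability.

    alpha and beta are Beta-distribution pseudo-counts of matches and
    mismatches; the default (1, 1) is a uniform prior, assumed for this sketch.
    """
    shifts = []
    for matches_pattern in observations:
        before = alpha / (alpha + beta)
        if matches_pattern:
            alpha += 1
        else:
            beta += 1
        after = alpha / (alpha + beta)
        shifts.append(abs(after - before))
    return shifts


# Twenty observations that fit the pattern, then one that does not.
shifts = belief_shifts([True] * 20 + [False])
print(f"20th confirming observation moved the estimate by {shifts[-2]:.4f}")
print(f"the single deviation moved the estimate by {shifts[-1]:.4f}")
```

Once the pattern is well established, another confirming observation barely changes the belief, whereas a single deviation moves it by more than ten times as much. That asymmetry is the sense in which matching details need little processing.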

- Cognitive Science and Information Theory

In cognitive science, particularly through the lens of information theory, researchers have explored how the brain handles redundant versus novel information.

Redundancy Reduction: The brain’s tendency to ignore or gloss over predictable details can be understood through the principle of redundancy reduction. According to this idea, the brain prioritizes processing resources for information that reduces uncertainty or provides new insights. Redundant information, which is entirely predictable, does not reduce uncertainty and thus may be processed with less attention or even filtered out.

Predictive Coding: Predictive coding is a model in cognitive neuroscience that posits that the brain is constantly generating predictions about incoming sensory information and updating those predictions based on actual input. When the input matches predictions, the brain considers it redundant and processes it with minimal effort. If the input deviates from the prediction, it becomes the focus of attention, as it indicates that the brain’s model of the world needs updating.
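A heavily simplified sketch of the predict-compare-update loop follows. It is a scalar delta rule, not the hierarchical model from the predictive coding literature; the function name `prediction_errors`, the learning rate, the threshold, and the signal values are all assumptions made for illustration.

```python
def prediction_errors(inputs, learning_rate=0.2, threshold=2.0):
    """Compare each input with a running prediction and flag large mismatches.

    Returns a list of (input, error, needs_attention) tuples for every input
    after the first, which is used only to initialise the prediction.
    """
    prediction = inputs[0]
    report = []
    for x in inputs[1:]:
        error = x - prediction                  # mismatch between input and expectation
        report.append((x, error, abs(error) > threshold))
        prediction += learning_rate * error     # nudge the model toward the input
    return report


# Made-up signal: stable at 10 with one unexpected spike.
signal = [10.0, 10.0, 10.0, 10.0, 15.0, 10.0, 10.0]
for value, error, needs_attention in prediction_errors(signal):
    label = "ATTEND" if needs_attention else "ignore"
    print(f"input={value:5.1f}  error={error:+.2f}  {label}")
```

Inputs that match the prediction produce zero error and are passed over. The spike produces a large error, is flagged, and also shifts the prediction slightly, so the following inputs generate small residual errors while the model settles back.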

- Economic Decision-Making and Bounded Rationality

From an economic perspective, the concept of bounded rationality—which suggests that people make decisions within the constraints of limited cognitive resources—also ties into how details are prioritized. Decision-makers may focus on details that carry the most significant potential impact or are the most uncertain, effectively ignoring predictable details that do not affect the overall decision outcome.

Attention Economics: Attention economics further explores how attention is allocated as a scarce resource. This field suggests that in a world overflowing with information, attention is directed toward elements that stand out, such as anomalies or unexpected details, while predictable information might be overlooked as it provides no additional value.

- Empirical Research

Empirical studies within these fields have explored how individuals prioritize attention and process information, with findings supporting the idea that the brain often ignores predictable details:

Visual Perception: Studies of visual search show that an item that differs from its surroundings tends to “pop out” and is noticed quickly, whereas consistent, predictable elements receive little scrutiny. The related phenomenon of change blindness, in which observers fail to notice even large changes to a scene when the change coincides with a brief visual disruption, suggests that much of a stable, predictable scene is not encoded in detail.

Decision-Making Under Uncertainty: Research in decision-making under uncertainty has found that people are more likely to focus on information that changes their understanding of a situation, while predictable or redundant information is given less attention. This aligns with the idea that predictable details do not merit cognitive focus because they can be inferred or reconstructed based on the known pattern.

The notion that predictable details may be overlooked because they are superfluous in a well-understood pattern is supported by research across several disciplines, including neuroeconomics, cognitive science, and information theory. These fields suggest that the brain prioritizes attention and cognitive resources toward information that is novel, salient, or uncertain, while redundant details that conform to an established pattern are often processed with minimal effort or even ignored. Although there may not be a specific study directly addressing your exact proposition, the underlying principles are well-supported by existing research.

Please quote some authors and studies? #

Certainly! Here are some key authors and studies from the fields of neuroeconomics, cognitive science, and related disciplines that explore concepts related to attention, pattern recognition, and the handling of redundant or predictable details:

- Karl Friston - Predictive Coding and the Bayesian Brain

Friston, K. (2010). “The free-energy principle: a unified brain theory?” Nature Reviews Neuroscience, 11(2), 127-138.

Karl Friston’s work on the free-energy principle and predictive coding is foundational in understanding how the brain minimizes surprise by predicting sensory input and focusing on discrepancies between predictions and actual input. Friston argues that the brain uses a Bayesian approach to update its models of the world, prioritizing attention to unexpected information that challenges these models.

Friston, K. (2005). “A theory of cortical responses.” Philosophical Transactions of the Royal Society B: Biological Sciences, 360(1456), 815-836.

This paper elaborates on how cortical responses are shaped by predictive coding, with the brain emphasizing processing of information that deviates from expectations, thus minimizing redundancy in cognitive effort.
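The core intuition of predictive coding, that only the mismatch between prediction and input needs further processing, can be sketched with a simple error-driven update. This is a deliberately minimal delta-rule toy, not Friston's free-energy formulation; the function names, the learning rate, and the input sequence are all assumptions made for illustration.

```python
from typing import List, Tuple


def update_prediction(
    prediction: float, observation: float, learning_rate: float = 0.5
) -> Tuple[float, float]:
    """Return (new_prediction, prediction_error) for one observation."""
    error = observation - prediction
    # Move the prediction toward the observation in proportion to the error.
    return prediction + learning_rate * error, error


def track_errors(
    observations: List[float], initial_prediction: float, learning_rate: float = 0.5
) -> List[float]:
    """Collect the prediction error produced by each observation in turn."""
    prediction = initial_prediction
    errors = []
    for observation in observations:
        prediction, error = update_prediction(prediction, observation, learning_rate)
        errors.append(error)
    return errors


# Three inputs that match the expectation, followed by one that does not.
errors = track_errors([1.0, 1.0, 1.0, 5.0], initial_prediction=1.0)
print(errors)
```

In this toy setup the inputs that match the prediction generate no error signal at all, and only the deviating input produces something to process.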

- Daniel Kahneman and Amos Tversky - Bounded Rationality and Decision-Making

Kahneman, D., & Tversky, A. (1979). “Prospect theory: An analysis of decision under risk.” Econometrica, 47(2), 263-291.

Kahneman and Tversky’s prospect theory is central to understanding how people make decisions under risk. It holds that people evaluate outcomes as changes (gains and losses) relative to a reference point rather than as absolute states. Their work has influenced how we think about attention in decision-making, suggesting that details which leave the perceived value or risk of a decision unchanged may receive little attention.

Simon, H. A. (1955). “A behavioral model of rational choice.” The Quarterly Journal of Economics, 69(1), 99-118.

Herbert Simon’s concept of bounded rationality, which influenced Kahneman and Tversky, posits that individuals have limited information and computational capacity, so they rely on simplifying strategies and settle for satisfactory rather than optimal choices, often ignoring details that are predictable or redundant.
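The reference-dependent evaluation at the heart of prospect theory can be sketched as a value function over gains and losses. The power-function form and the default parameters below (0.88 for curvature, 2.25 for loss aversion) are the commonly cited estimates from Tversky and Kahneman's later 1992 work, not from the 1979 paper itself, so treat them as illustrative assumptions; the function name is hypothetical.

```python
import math


def prospect_value(
    outcome: float,
    alpha: float = 0.88,
    beta: float = 0.88,
    loss_aversion: float = 2.25,
) -> float:
    """Subjective value of an outcome measured relative to a reference point of 0.

    Gains are valued concavely, losses convexly, and losses are
    weighted more heavily than gains of the same size.
    """
    if outcome >= 0:
        return outcome ** alpha
    return -loss_aversion * ((-outcome) ** beta)


gain = prospect_value(100.0)
loss = prospect_value(-100.0)
no_change = prospect_value(0.0)

print(gain, loss, no_change)
```

An outcome that matches the reference point has zero subjective value here, which mirrors the argument that details leaving the expected picture unchanged contribute nothing to the decision.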

- Christopher Summerfield and Tobias Egner - Attention and Predictive Coding

Summerfield, C., & Egner, T. (2009). “Expectation (and attention) in visual cognition.” Trends in Cognitive Sciences, 13(9), 403-409.

Summerfield and Egner explore how attention is modulated by expectations in visual cognition. Their research supports the idea that the brain focuses on information that deviates from expected patterns while minimizing attention to predictable details. This aligns with the broader theory of predictive coding, where the brain conserves cognitive resources by focusing on surprising or novel inputs.

Summerfield, C., & de Lange, F. P. (2014). “Expectation in perceptual decision making: neural and computational mechanisms.” Nature Reviews Neuroscience, 15(11), 745-756.

This review discusses how expectation shapes perceptual decision-making, reinforcing the concept that predictable information requires less cognitive processing, with attention being directed toward unexpected or anomalous details.

- George A. Miller - Information Theory and Redundancy

Miller, G. A. (1956). “The magical number seven, plus or minus two: Some limits on our capacity for processing information.” Psychological Review, 63(2), 81-97.

Miller’s work on information processing capacity emphasizes that the human brain can hold and transmit only a limited amount of information at once and copes by recoding input into larger chunks. Although not directly about neuroeconomics, Miller’s insights have influenced how we understand cognitive load and attention, particularly in relation to predictable versus novel information.

- Ernst Pöppel - Temporal Perception and Attention

Pöppel, E. (2004). “Lost in time: a historical frame, elementary processing units and the 3-second window.” Acta Neurobiologiae Experimentalis, 64(3), 295-301.

Pöppel’s work on temporal perception suggests that the brain processes information in small, discrete time windows, during which it can filter out predictable details and focus on novel or unexpected information. This temporal segmentation allows the brain to efficiently manage cognitive resources by minimizing attention to redundant details.

- Antonio Damasio - Emotion and Decision-Making

Damasio, A. R. (1994). Descartes’ Error: Emotion, Reason, and the Human Brain. New York: G.P. Putnam’s Sons.

Damasio’s research integrates emotion into the decision-making process, highlighting how emotional salience can draw attention to specific details, particularly those that are unexpected or carry significant personal relevance. This work complements neuroeconomic perspectives on how attention is directed toward certain details while others are ignored.

These authors and studies provide a theoretical and empirical foundation for understanding how the brain processes predictable versus novel information, particularly in the context of decision-making, attention, and pattern recognition. Their work supports the idea that predictable details may often be deprioritized in favor of those that offer new, salient information.